ChatGPT Health Is Here, But Is Your Wellbeing in Safe Hands? ⚠️

And Could AI Really Predict Future Killers Before Crimes Happen?

Welcome back, Wellonytes 🧠⚡

This week’s Well Wired peers into the unsettling shift happening beneath the surface: AI isn’t just shaping your choices anymore, it’s shaping your self-trust. We also look at the cancer-hunting AI accelerating drug discovery and the big, bold promises made by ChatGPT Health. 🩺💻

From creating better focus to scanning your future crimes (yes, really), we’re exploring the razor’s edge between breakthrough and boundary. And if your emotions feel stuck? We’ve also got an AI trick for translating body sensations into movement and clarity.

And of course, remember that Well Wired ⚡ ALWAYS serves you the latest AI-health, productivity and personal growth insights, ideas, news and prompts from around the planet. We’ll do the research so you don’t have to! ❤️

Well Wired is constructed by AI, created by humans 🤖👱

Today’s Highlights:

🗞️ Main Stories AI in Wellness, Self Growth, Productivity

💡Learning & Laughs AI in Wellness, Self Growth, Productivity

💡AI Tip of The Day (Want to be More Focused? Stop Screwing Around)

⚡Supercharge + Optimise 🔋 (AI tools & resources)

📺️ Must watch AI videos (The Clinicians Who Code 🧠 💻)

🎒AI Micro-class (Use AI to Turn Body Sensations into Emotional Movement)

📸 AI Image Gallery (Fantastical Fruitlings)

Read time: 6 minutes

💡 AI Idea of The Day 💡

A valuable tip, idea, or hack to help you harness AI for wellbeing, spirituality, or self-improvement.

Self Growth: If You Want to be More Focused, Stop Screwing Around. 💡

Your inability to focus isn’t a personal discipline problem.

You’re facing a design problem that you keep trying to solve with grit, willpower and good intentions.

You tell yourself to “try harder”, “lock it in”, or “be more consistent”, while your environment quietly sabotages you from every angle.

Endless pings and notifications, poor transitions, low energy, vague priorities; all left untouched.

What you don’t realise is that it isn’t a willpower failure.

It’s, quite simply, bad internal and external architecture.

If you want better focus, the solution isn’t to white-knuckle your way through every distraction. The real goal is to stop making focus unnecessarily hard.

✅ Choose to design your environment for success; that way you make it easier to start instead of over-relying on willpower alone. Then make it even easier by redesigning with AI.

Prompt: Environment for Effortless Action

[Start Prompt]

Act as my environment design strategist.

Based on my primary goal right now, analyse my physical, digital, and social environment and identify what currently creates friction or distraction.

Then:

List what I should remove, reduce, or hide to make starting easier

Suggest one environmental redesign that lowers effort to near zero

Show how I can use AI to automate, pre‑decide, or remove decision points

Focus on making the right action the default, not the heroic one.

[End Prompt]

✅ Choose energy management over time management to give you the juice to get done what you need to get done. Work out what your specific energy signature is by harnessing AI.

Prompt: Personal Energy Signature Mapper

[Start Prompt]

Act as my energy management analyst.

Using my daily rhythms, recent workload, sleep quality, stress patterns, and focus levels, help me identify my personal energy signature.

Then:

Identify my high‑energy, low‑energy, and recovery windows

Match the right type of work to each energy state

Highlight what drains energy unnecessarily

Suggest one simple adjustment that would immediately increase usable energy

Optimise for sustainable output, not maximum hours.

[End Prompt]

✅ Choose clarity before action over busywork so you focus on your most precious goals earlier, rather than making them an add-on. Use AI to get clear and subtract before adding anything else.

Prompt: Clarity & Subtraction Filter

[Start Prompt]

Act as my clarity and prioritisation guide.

I’ll list my current goals, projects, and obligations.

Your task is to:

Identify what is urgent vs important using Eisenhower logic

Highlight what should be paused, removed, or deprioritised

Clarify the one focus that deserves early attention, not leftover time

Rewrite my priorities as subtraction rules rather than added tasks

Do not give me more to do.

Help me see what to stop so focus can emerge.

[End Prompt]
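If you’d like to see the “Eisenhower logic” from that last prompt spelled out, here’s a minimal Python sketch. The `Task` fields, the four quadrant labels and the sample tasks are my own illustrative assumptions; they don’t come from any tool mentioned in this issue.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    urgent: bool      # has a real, near-term deadline
    important: bool   # actually moves a goal you care about


def eisenhower_quadrant(task: Task) -> str:
    """Sort one task into one of the four Eisenhower quadrants."""
    if task.urgent and task.important:
        return "do first"
    if task.important:
        return "schedule early"
    if task.urgent:
        return "delegate or shrink"
    return "drop"


def stop_list(tasks: list[Task]) -> list[str]:
    """Names of tasks that are not important: candidates to pause or remove."""
    return [t.name for t in tasks if not t.important]


# Hypothetical sample tasks, purely for illustration.
tasks = [
    Task("Finish client proposal", urgent=True, important=True),
    Task("Plan next quarter's goals", urgent=False, important=True),
    Task("Reply to every group chat", urgent=True, important=False),
    Task("Reorganise app folders", urgent=False, important=False),
]

for task in tasks:
    print(f"{task.name}: {eisenhower_quadrant(task)}")

print("Stop list:", stop_list(tasks))
```

Notice that the stop list is built from what isn’t important, not from what isn’t urgent. That’s the subtraction rule in action: the important-but-not-urgent work gets early attention, and the noisy-but-unimportant work is what you cut.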

AI + Awakened Action

Habits stick when friction drops.

AI’s job is friction removal, not motivational speeches.

Focus doesn’t come from motivation, it comes from inspiration.

It comes from removing friction in the right places.

When your environment supports clarity, you respect your energy and your actions follow your acknowledgement of self.

That’s when habits stop feeling like a fight.

They start feeling obvious.

That’s where AI earns its keep.

Not as a faceless cheerleader.

Not as a techno-bot Tony Robbins.

But as a quiet systems assistant that smooths the path so your best behaviour unfurls and no longer needs heroic effort.

Lower the friction.

The rest takes care of itself.

🗞️ On The Wire (Main Story) 🗞️

Discover the most popular AI wellbeing, productivity and self-growth stories, news, trends and ideas impacting humanity in the past 7 days!

Self Growth 🧠

AI Hasn’t Made You Smarter, But it May Have Made You Stop Trusting Yourself.

How reliance on artificial intelligence is reshaping your confidence, agency and attention…

A computer screen sitting in a beachside room

“Every AI shortcut saves you time, but what else could that cognitive laziness be stealing at the same time?”

You start with a harmless shortcut; summarise this, rewrite that, give me options. Then you notice a new reflex. Before you feel your own answer, you reach for your keyboard, you punch in a prompt.

Psychology Today frames this as AI dependence; the slow outsourcing of judgement, memory, creativity and emotional regulation to a system that is always available and rarely disagrees.

I’m not talking about a Terminator rant about the machines “taking over”. No, what I’m talking about is the wholesale rental of your cognition and critical thinking to a machine, because “it’s easy and quick”.

Quick segue.

AI should always be treated as a technological teammate, not as a replacement for thinking that turns into a crutch; treat it as such…

The Quiet Slide: Tool → Crutch 🧠

Dependence isn’t dramatic.

It looks like efficiency.

Why wrestle with a decision when AI can rank your choices?

Why sit with uncertainty when a model can hand you neat wording?

Repeated reliance can chip away at your cognitive confidence. If you routinely externalise decisions, you start doubting your own judgement, even in areas where you used to feel confident and solid.

Over time, your inner voice quietens down because it’s not getting the reps.

Emotional Outsourcing 🤝

The stickiest part is emotional.

AI doesn’t get tired, awkward or impatient. For stressed or lonely users, that computer confidence and consistency can feel like stability.

But self-soothing is a skill.

If reassurance and perspective always come from outside you, your resilience thins fast. Discomfort becomes something to remove, not something to metabolise internally.

A calm reply can become a sedative.

What AI Is Doing 🤥

Models don’t “know” what’s best for you.

They predict language that fits patterns. They don’t notice when consultation becomes compulsion. They don’t carry responsibility for the meaning you attach to the output.

Fluency adds a false sheen of authority, especially when you’re tired.
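To make “predict language that fits patterns” concrete, here’s a toy sketch in Python. It’s a simple bigram counter, a drastically simplified stand-in I’m using for illustration; it is not how ChatGPT or any production model is built. The function names and the tiny corpus are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, purely for illustration.
corpus = "drink water daily drink water slowly drink tea"
model = train_bigrams(corpus)

print(predict_next(model, "drink"))   # the follower seen most often
print(predict_next(model, "coffee"))  # never seen, so no prediction
```

The toy model will tell you to “drink water” simply because that pairing showed up most often in its text. It has no idea whether water is good for you. Real models are vastly more sophisticated, but the basic point holds: plausible next words aren’t the same thing as knowing what’s best for you.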

For example, I asked ChatGPT, “How do you, as an AI, predict language that fits patterns, and what patterns do you use?” Here is the AI’s response.

When you look at that response, you get a sense of clarity and confidence that the answer is correct and consistent with your question.

And in some ways it is, but AI can also make mistakes and it often does hallucinate, sometimes horribly.

For example, a medical AI was documented inventing a non-existent body part during a medical summary, while another famously suggested adding non-toxic glue to pizza.

Long story short… Always train your ability to think critically about AI’s answer before taking it at face value.

OK, so what do you do about it?

“When answers arrive too easily, your inner voice forgets how to speak up.”

#AI #HumanAI #CriticalThinking #PhilosophyOfTechnology #CognitiveBias #WellWired

How to Use AI Without Losing Your Edge

You don’t need to go on a total AI digital detox and move to a farm. What you do need are boundaries that keep your brain and mental agency in the loop:

Answer first, prompt second. Write your own take in 60 seconds, then use AI to refine or stress-test it.

Create no-AI zones. Keep certain decisions, drafts and emotional check-ins fully with you or another person.

Delay reassurance. When you want comfort, wait five minutes, breathe, journal a few lines, then decide if you still need the prompt.

Track your triggers. Notice the moments you reach for AI: fatigue, conflict, loneliness, overwhelm. Those are your training opportunities.

Key Takeaways 🧩

Convenience can quietly become reliance.

Over-reliance weakens confidence in your judgement.

Emotional outsourcing can thin resilience.

AI predicts plausible text; it doesn’t safeguard your agency.

Boundaries keep the tool useful and the mind trained.

If clarity arrives instantly, pause.

The point isn’t to never use AI, the point is to stay as the author of your own decisions and desires, rather than always relying on data first.

Why It Matters 🔍

Remember that you’re not simply using AI to save time, you’re shaping your habits and thoughts. When you remove effort, you remove trust in your own processes, ideas and creativity.

And trust is what allows you to act without permission.

It’s what opens you up to those rare sparks of creativity.

Used consciously, AI can sharpen thinking.

Used reflexively, it can soften and smudge it.

You don’t need to choose between human or machine intelligence. You simply have to decide where each belongs.

If a tool always answers first, your inner voice will never get a turn.

And in today’s AI-powered planet, that voice still matters more than you think.

“What you don’t practise, you slowly forget you ever had.”

What You Can Do 🧠

Notice when prompts replace pauses.

Ask yourself what you’d say without assistance.

Let discomfort last longer than convenience.

Use AI as a collaborator, not as a compass.

Technology can support your thinking and brainstorming.

But it can’t replace the slow work of being confident in your own unique way.

If clarity arrives instantly, wait.

The mind grows stronger in the moments you choose not to outsource.

“Agency isn’t lost in a single decision. It fades when you stop making them.” 🤔

Wellness 🌱

ChatGPT Health Is Here, But Is Your Wellbeing in Safe Hands? ⚠️

Medical experts are worried about the recent release of ChatGPT’s new health AI chatbot; should you be?

An Open AI robot researches medical books at a desk

“The more confident the answer, the more important it is to ask who’s responsible for the outcome.”

Last week we chatted about how the latest Google AI Overviews have been giving misleading health advice to people all over the world (read more here); so it’s no wonder that ChatGPT’s new health AI feature has experts worried.

A new AI health advice feature is rolling out in Australia and around the world, and medical experts warn that it might be too soon to trust what it says about your body and brain.

An interface called ChatGPT Health now lets you ask wellness questions, interpret test results and link medical records or wellness apps to get personalised replies.

And while it sounds like a positive step toward clearer health guidance, beneath the magical promise lie the inevitable questions about safety, regulation, data privacy and the very nature of medical advice in an age of silicon intermediaries.

Medicine, Information and Misleading Certainty

Let me paint a picture…

You’ve probably asked a search engine about symptoms before.

What you might not have noticed is that some of those AI‑generated health summaries were dangerously wrong!

AI has been caught advising people to avoid vital nutrients, misinterpreting liver test ranges, or giving incomplete context for serious conditions.

I’m not saying that ChatGPT Health will do the same, but it’s so new that we simply don’t know. And remember, it isn’t operating in isolation. It is entering a health landscape where people like you probably already use chatbots for health queries at massive scale; millions of times a week.

And if you think that means trustworthiness, be cautious because the system isn’t regulated as a medical device, nor is it subject to mandatory safety testing or reporting to medical bodies or boards.

One early incident shows you what’s at stake.

A man in Australia ended up in hospital with hallucinations after acting on AI‑suggested advice to use an industrial compound as a dietary substitute.

Think about it…

Your health query starts innocently enough. “What’s this rash on my thigh?” turns into “Am I dying of shingles?” and before you know it, your AI has you convinced you’ve got three rare disorders and a ticket to the afterlife.

That may sound idiotic and extreme, but it shows a simple reality; while a chatbot isn’t a GP, it does speak with unnerving confidence. And that confident language can feel like competent guidance, even when it’s not.

OpenAI claims to have ironed out the kinks by working with hundreds of clinicians globally in building this tool. The company also highlights its focus on data privacy protection.

However, critics point out that where general information ends and medical advice begins isn’t obvious to the average user.

So What’s Really Going on With AI Health Advice?

Health advice isn’t like other search results.

When you ask a chatbot about a sore throat or a rash, you’re not just satisfying curiosity; you’re making decisions about your body, behaviour and possibly your future health.

When AI speaks confidently about treatment strategies, dosages, diet options or risk factors, you naturally give it weight. That’s not irrational, it’s human. But the conversational fluency of an AI doesn’t guarantee clinical accuracy.

In several studies, even widely used chatbots have delivered unsafe responses to medical questions. And they’ve done so with alarming frequency.

It’s like asking your overly confident vegan mate for medical advice; the one who once googled “detox” and now thinks cumquat juice cures everything.

Except this friend is available 24/7, never doubts itself and doesn’t get sued when you end up in the emergency department.

And here’s the thing, the issue isn’t about the occasional glitch or two.

It’s structural.

These models are trained on patterns of information from the internet.

They aren’t equipped with the deep context, physical examination, nuance and judgement that trained clinicians bring to every diagnosis and treatment plan.

At least not yet.

This is an issue when people, particularly those in vulnerable situations, take AI responses at face value, delay seeking the right care, or act on incomplete or incorrect information.

And that can have disastrous consequences.

“The tools you trust shape the risks you don’t see.”

“Technology accelerates questions. Wisdom decides which ones are worth asking.”

#AI #AIHealth #DigitalHealth #DigitalWellbeing #HealthByDesign #PreventativeHealth #ChatGPTHealth

From Helpful Assistant to Unregulated Advisor

Some healthcare experts see the benefit in AI helping you prepare for your appointments, understand medical jargon and organise your own records. After all, healthcare is complex and opaque.

But AI’s potential can only turn into progress when clear and robust safety nets are put into place first.

At the moment, there is no requirement for independent, peer‑reviewed evaluation of ChatGPT Health’s performance. There is no legal obligation to report risks, nor a regulatory framework defining what counts as acceptable accuracy or harm.

That leaves you in a grey zone where tech companies can define the norms faster than governments and health systems can adapt.

In other words, you’re test‑driving a health adviser that hasn’t been through the crash tests yet; smooth interface, confident answers and a safety manual still being written.

That may benefit those with resources and medical literacy; it may leave others exposed to confusion, misdiagnosis and digital reliance masquerading as personalised healthcare.

“Tailored medical advice is only powerful if someone’s accountable for when it’s wrong.”

Key Takeaways 🧩

AI health chat features can feel personalised but remain unregulated and untested in clinical settings.

Confident language from an AI is not the same as accurate medical judgement.

Cases of misleading advice show how easily risk can be amplified.

Tools may help organise information, but they can’t replace nuanced clinical assessment.

Governance, transparency and consumer education are critical before these tools scale further.

Why It Matters 🔍

If you treat these tools like search engines, you’re missing the shift; they speak as if they understand you, your body, brain, and your history.

But fluency isn’t understanding.

A machine can compose reassuring prose, cite data and frame probability; without truly knowing your situation.

That structural gap matters because health diagnosis and decisions are high stakes. When you’re tired, worried or in pain, nuanced judgement is what protects you.

AI doesn’t worry.

It optimises for confidence.

“The more fluent the machine, the easier it is to forget it doesn’t truly care.”

Final Thoughts 💤🧠

Let me be clear, I’m not anti-AI when it comes to harnessing this technology for your wellbeing and self growth; quite the opposite. It’s the whole premise of this newsletter.

However, what I am examining is where trust lives in a system that speaks convincingly but doesn’t shoulder responsibility…

…through no fault of its own.

AI has massive promise in supporting health literacy and easing information overload.

It can help you prepare questions for your clinician, organise data and make sense of unfamiliar medical terms. But it should never be a surrogate for clinical insight.

Used well, AI can illuminate confusion.

Used unwisely, it can amplify it.

The goal isn’t to replace humans with machines.

It’s to build a hybrid; where technology supports insight, not overrides it.

That begins with using AI not just for speed or scale, but for self-awareness, wellbeing and depth.

Tools that remind you to be more human, not just more efficient.

But let’s not confuse a helpful silicon sidekick with a stand-in surgeon. Just because a chatbot knows your iron levels doesn’t mean it should be picking your treatment plan like it’s choosing toppings on a burrito bowl.

If technology is going to be part of your health journey, the least we can do is to train it wisely to make it clear to you, the user, what it is and what it isn’t.

Your health deserves judgement that isn’t just well phrased.

“AI medical advice isn’t wisdom. And clarity without context is just noise in a better font.” 😴 💭

Quick Bytes AI News⚡

Quick hits on more of the latest AI news, trends and ideas focused on wellbeing, productivity and self-growth over the past 7 days!

Key AI Wellbeing, Productivity and Self Growth AI news, trends and ideas from around the world:

Wellness: A New Breed of AI Hunters Find Fast Cancer Cures

Summary: Advanced machine learning models are helping scientists screen thousands of compounds to find promising CDK9 inhibitors; a class of molecules that may help switch off cancer’s survival signals.

Although AI isn’t curing cancer just yet, it’s giving researchers a much bigger head‑start. Watch this space!

Takeaway: If medicine had a search engine, this is what it would look like; thousands of drug leads pulled from deep complexity and simply handed to scientists to validate like lost keys found in a sofa. 🔬🤖

Wellness: From Watson to ChatGPT Health; Why This Time Feels Different

Summary: Like me, you’re probably watching how AI is stepping into healthcare again, but this time with more advanced, later-generation tools and clearer lanes.

OpenAI is going direct to patients with ChatGPT Health, while Anthropic is schmoozing hospitals and research institutions. Same destination, very different doors. 🏥

Takeaway: The shift isn’t about replacing doctors, but about who controls medical information. AI’s real power lies in translation, not diagnosis. However, privacy and trust will decide how far it’s allowed into the inner ‘medical’ circle. 🏥🤖

Wellness: AI‑Generated Virtual Psychedelics Could Expand Mental Health Tools

Summary: Researchers are proposing a bold bridge between digital tech and therapy by using AI and virtual reality to create psychedelic‑like experiences that mimic the effects of traditional compounds without drugs.

Rather than ingesting a molecule, users could undergo immersive, AI‑driven simulations that engage the brain in altered states used in therapy research.

Takeaway: If mental health care is stuck between slow‑moving regulation and promising but risky drugs, digital “cyberdelics” might offer a way to tap the therapeutic part of psychedelic experiences without the chemistry. 🌐🧠

Wellness: AI Fuels a New Era of Medical Self‑Testing

Summary: AI is powering a wave of self‑testing tools that could make checks for major diseases more accessible outside clinics. Going beyond smartwatches, these systems analyse biomarkers and health data to flag issues earlier, shifting healthcare from reactive care to proactive awareness.

Takeaway: With AI making self‑testing easier and more accurate, you might soon spot health risks before symptoms hit; giving you a chance to act earlier and take control of your health rather than waiting for a doctor’s appointment. 🩺💡

Productivity: Why We Create “AI Workslop” And How to Stop It

Summary: With the advent of AI, more and more professionals are churning out messy, half‑baked AI content, “workslop”, that feels and looks productive but isn’t.

But how do we stop it when so many people are using it, and does that burgeoning use give us some insight? I think it does. What I’m seeing today is that AI doesn’t fix sloppy thinking; it highlights it.

Takeaway: If you don’t define the problem clearly, AI will happily ramble in circles; like asking a sous‑chef to cook without a recipe and blaming the potatoes. 🥔 🔄

Productivity: Using AI as Your Digital Assistant

Summary: AI assistants are creeping into our routines, even at night, and what that means for focus, sleep and work‑life tension is being researched by the brightest minds on the planet.

It’s one thing getting bots to take over your tasks; it’s another allowing them to shape your mental space. Dr Nici Sweaney, CEO of AI Her Way, and Petr Adamek, CEO of the Canberra Innovation Network, are exploring this very question.

Takeaway: Just because something can answer your every thought confidently doesn’t mean it should. True clarity comes from prioritising what matters, not from more tech-based noise. 📱🌙

Self Growth: Could AI Really Predict Future Killers Before Crimes Happen?

Summary: Remember Minority Report, the film based on Philip K. Dick’s story? Well, new research ponders whether AI tech might one day forecast violent behaviour. And what would it mean to use psychology and data to predict who might cause harm?

It’s an eerie, Black Mirror-esque idea that could one day become a reality.

Takeaway: Predictive insight seems smart at the outset, until it starts treating you like a probability, not a person. This is a reminder that care always needs context, not just correlation. 🧠 ⚖️

Self Growth: In the AI Age, Human Advantage Matters More

Summary: The McKinsey Health Institute thinks that machines won’t replace humans; but humans who harness AI thoughtfully will outpace those who don’t. Skills like judgement, empathy and creativity still define progress, even with AI help.

“The rise of artificial intelligence highlights how investment in “brain capital” (brain health and brain skills) can boost resilience, productivity, and growth.”

Takeaway: Don’t think about yourself as competing with AI, but see yourself as competing with other humans using AI. Your edge won’t be how advanced your AI-powered tech is; it’ll be how your mind deciphers which problem to solve and how. 🧠 🚀

Other Notable AI News⚡

Other notable AI news from around the web over the past 7 days!

When it comes to wellbeing, how does AI help or hurt patients?

How Psychologists are analysing the AI chats of their patients

AI can help good leaders be better, but it will never replace them

AI-generated pop song hit banned from Sweden’s official charts

This Canberra lawyer is using AI to war-game world problems

Viral native Australian animal stories TikTok star doesn’t even exist

⚡ AI Tool Of The Day

Each week, we spotlight one carefully chosen AI tool designed to sharpen your health, steady your mind, or unclog your workflow. These aren’t flashy novelties or dopamine toys; they’re quiet operators doing useful work in the background.

One tool. One subtle shift. A slightly more intelligent way to live or lead. 🧠✨

Wellness: Nutrino (by Medtronic)

Use: Nutrino is an AI-powered nutrition intelligence platform used in clinical and chronic health settings to analyse dietary patterns, glucose response and metabolic health.

AI Edge: Nutrino connects food intake with physiological data, helping clinicians and patients understand how meals actually affect the body; not in theory, but in lived biology. The system learns patterns over time, flagging nutritional choices that quietly destabilise health long before symptoms appear.

Best For: People managing chronic conditions, clinicians working with metabolic health, and anyone who wants nutrition advice grounded in data rather than diet trends.

Why it’s nifty: It doesn’t moralise food or shout about willpower. It simply shows you what your body does in response to what you eat, then lets clarity do the heavy lifting.

Productivity: Fathom

Use: Fathom is an AI meeting assistant that records, transcribes and extracts action items from video calls; without turning every conversation into a memory test.

AI Edge: It automatically highlights decisions, follow-ups and key moments, then organises them into clean summaries you can actually use. No frantic note-taking. No “wait, what did we decide?” emails.

Best For: Knowledge workers, founders and teams who want meetings to produce movement, not mental residue.

Why it’s nifty: Fathom listens so you don’t have to. You stay present in the conversation, then walk away with clarity instead of cognitive lint.

Self Growth: Happify Health

Use: Happify Health delivers AI-personalised cognitive behavioural pathways designed to build emotional resilience, reduce stress and improve mental wellbeing over time.

AI Edge: The platform adapts exercises and reflections based on how you respond, nudging you towards patterns that genuinely shift mood and behaviour rather than generic advice loops.

Best For: Anyone wanting structured emotional support that fits into daily life without turning growth into a second job.

Why it’s nifty: It doesn’t ask you to fix yourself. It quietly trains your nervous system to respond differently, one small pattern at a time.

AI wellbeing tools and resources (coming soon)

📺️ Must-Watch AI Video 📺️

🎥 Lights, Camera, AI! Join This Week’s Reel Feels 🎬

Wellbeing: The Clinicians Who Code 🧠 💻

What it’s about: Logan Nye, a Harvard-trained physician, makes a simple but unsettling point; most medical AI fails because the people building it don’t fully understand medicine and the people practising medicine don’t know how the code works.

In this TEDxBoston talk, Nye argues for a new kind of clinician; the doctor who can diagnose and debug.

Not to replace data scientists.

Not to turn doctors into programmers overnight.

But to close a dangerous translation gap where life-critical tools get built without lived clinical context.

💡 Idea: What if the safest AI in healthcare isn’t built for doctors, but by them? When one mind holds both medical intuition and machine logic, errors marginally shrink and insight sharpens.

🌍 At scale: Hybrid clinician-coders could radically speed up innovation, which could mean fewer misunderstandings, faster deployment and better alignment with real patient needs.

⚙️ AI Edge: Deep learning guided by clinical judgement. Models trained not just on data, but on bedside reality.

🧠 Best for: Clinicians curious about AI, builders working in health tech and anyone wondering who should be trusted to design intelligence for bodies.

“The future doctor may not just read scans; they’ll read the code that interprets them.”

🎒 AI Micro Class 🎒

A quick, bite-sized AI tip, trick or hack focused on wellbeing, productivity and self-growth that you can use right now!

Wellbeing: Somatic Intelligence: Use AI to Turn Body Sensations into Emotional Movement 🧠🦵

A Tai-Chi Robot training humans

Your Body Already Knows What You’re Avoiding

Hello Wellonyte,

If you’ve ever sat with a tight chest, clenched jaw or inexplicable low‑level tension and wondered, “What emotional pothole did I drive over?”, you’re not alone.

Most of us live in a tug‑of‑war with feelings we can’t name and that’s exactly where avoidance likes to live.

What I’m proposing today won’t make your emotions vanish.

What it will do is teach you how to use your body as a compass and AI as a mirror, to notice sensation, name hidden emotional drivers and then release them through simple, tailored movement.

And no, we’re not going to immerse ourselves in Eastern mysticism, nor will we apply vague, wishy-washy coaching techniques.

Instead we’ll apply thoughtful, human‑centred insight with a tech‑assisted twist. 🌀

Let me explain…

Have you ever noticed how stress in your shoulders feels different to anxiety in your stomach?

That’s because your body already knows what your mind glosses over.

Somatic intelligence isn’t an underground mystical idea, it’s a scientific fact that the body stores, rehearses and holds emotional patterns in a way that speech alone can rarely reach.

It’s the nature of trauma and chronic stress to bypass the thinking mind.

Instead of becoming tidy narratives, unprocessed experiences lodge themselves in muscles, breath patterns and reflexes; a clenched jaw here, a shallow breath there, a nervous system stuck in the “on” position even when the danger has long passed.

This is why trauma survivors may not remember what happened, but their bodies react as if it’s still happening.

The body keeps score not out of malice, but because it’s trying to protect you; even if the memory is implicit, unspoken and stored as sensation rather than story.

Now imagine your nervous system as a radio tuned to your life frequency.

But if all you ever do is think about your world, you’ll never switch the dial to the station your body is broadcasting.

And this is where AI can help.

You can harness it to become your somatic receiver; like a mirror you can hold up not to your identity, but to your raw sensations.

When used wisely, AI can help you listen more deeply. Not to fix, analyse or optimise, but to witness what your body has been trying to say for years; patiently and without words.

“The body remembers what the mind forgets, movement is how it tells the truth.”

Your Emotions Are a Barometer to Your Evolution

Our culture has trained us to avoid discomfort in the mind while the body quietly accumulates tension.

Research in affective neuroscience shows that unprocessed emotions don’t disappear, they amplify physiological stress markers like increased heart rate, muscle tension and disrupted breathing patterns.

A 2024 Psychology Today article noted that people who regularly label and move through bodily sensations report greater emotional regulation and lower anxiety.

You’re not forcing calm so much as listening correctly.

Here are a few metaphors to make sense of it:

Emotions as water in a riverbank. When the flow is blocked — jaw, chest, belly — pressure builds until there’s a shift, sometimes dramatic, sometimes gentle.

Avoided emotions are unread emails. You see the count rising, but you ignore it. Then one day the app crashes.

Your nervous system is a GPS. If you’re lost emotionally, it’s signalling you. You just haven’t asked it the right way.

This matters because emotional avoidance doesn’t just feel bad, it erodes clarity, drains energy and hijacks attention without telling you clearly why.

By pairing your bodily awareness with something that listens back, you’re replacing vague discomfort with real insight.

And that’s where AI, when used thoughtfully, becomes a somatic mirror for your feelings…

Let me show you how.

“You can’t solve a feeling with a thought. You must give it space to stretch.”

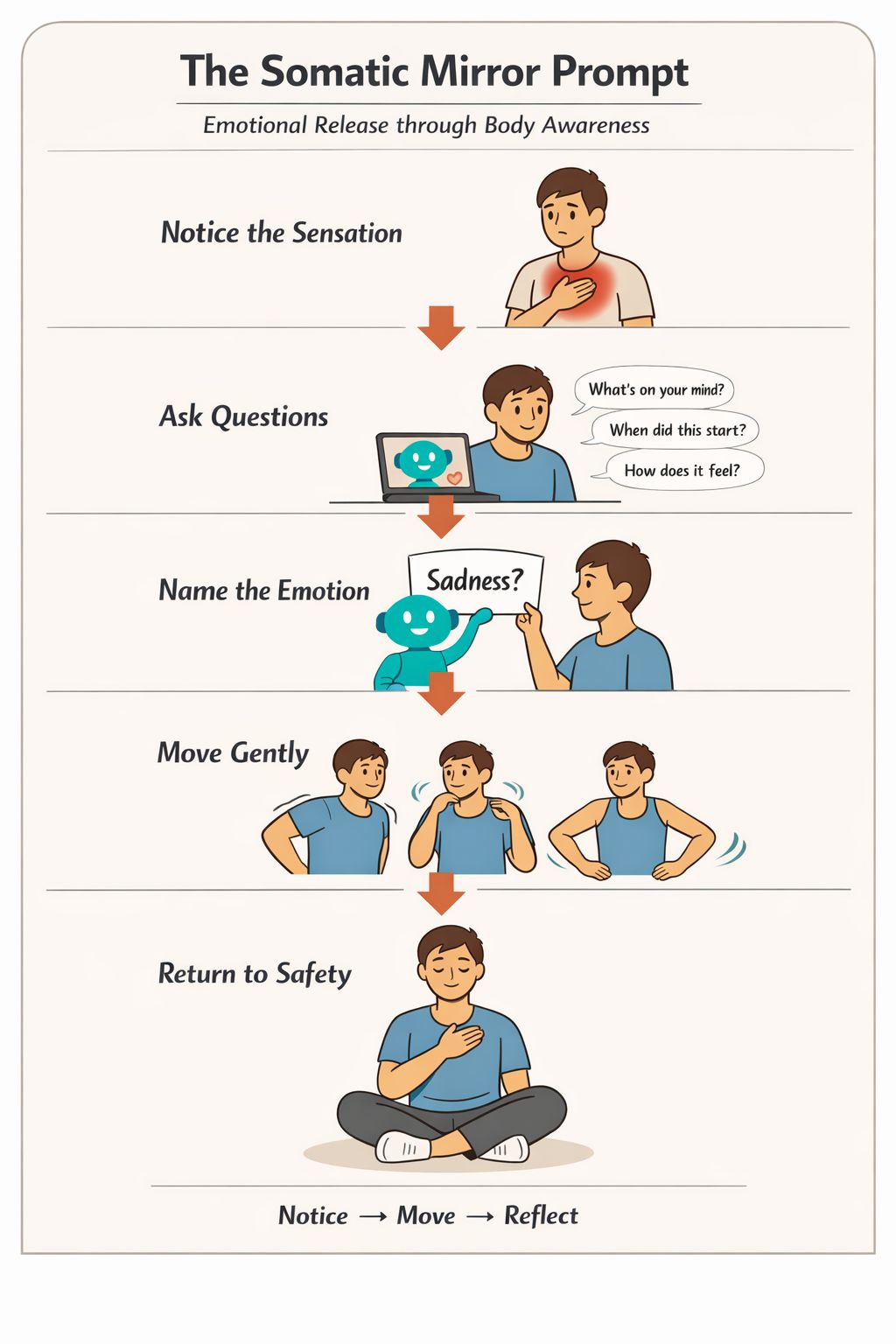

Prompt Corner: The Somatic Mirror Prompt

Purpose: To get AI to act as a compassionate somatic guide that translates your physical sensations into emotional insight and then gives you movement prompts to help you release it.

[Start prompt]

Act as my compassionate somatic guide.

I’m noticing the following physical sensation in my body:

[Describe the sensation clearly. Include location, texture, intensity, and whether it feels sharp, dull, heavy, tight, restless, warm, cold, etc.]

Based on this sensation, please:

1. Slow the moment

Ask me three gentle, non-leading questions that help connect this sensation to an underlying emotional state or unmet need.

2. Name the likely emotional signal

Reflect back what emotion or state this sensation may be signalling, without diagnosing or pathologising. Use tentative language, not certainty.

3. Offer a tailored 5-minute movement reset

Suggest a short, safe sequence using simple movements (stretching, shaking, grounding, opening or softening) that fits the emotion and body area involved.

4. Return me to safety

End with one short grounding cue using breath, posture, or sensory orientation to help my nervous system settle.

Keep everything gentle, practical, and human.

This is emotional support and awareness, not medical treatment.

[End prompt]
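If you like to keep your check-ins consistent, here is a minimal Python sketch of one way to fill in the sensation description before pasting the prompt into your AI tool. The `Sensation` class, its field names and the 1 to 10 intensity scale are all assumptions made for illustration; they are not part of the prompt itself or of any app.

```python
from dataclasses import dataclass, field


@dataclass
class Sensation:
    """One body sensation. Field names are illustrative, not from any app."""
    location: str    # e.g. "stomach"
    texture: str     # e.g. "knotted"
    intensity: int   # assumed self-rating from 1 (faint) to 10 (intense)
    qualities: list[str] = field(default_factory=list)  # e.g. ["cold", "heavy"]

    def describe(self) -> str:
        """Turn the fields into one plain sentence for the prompt."""
        if not 1 <= self.intensity <= 10:
            raise ValueError("intensity must be between 1 and 10")
        text = (
            f"A {self.texture} sensation in my {self.location}, "
            f"intensity {self.intensity}/10"
        )
        if self.qualities:
            text += ", that also feels " + " and ".join(self.qualities)
        return text + "."


# Opening lines of the Somatic Mirror Prompt; paste the four numbered steps after it.
PROMPT_OPENING = (
    "Act as my compassionate somatic guide.\n\n"
    "I'm noticing the following physical sensation in my body:\n\n"
    "{sensation}\n\n"
    "Based on this sensation, please:"
)


def build_prompt_opening(sensation: Sensation) -> str:
    """Insert the described sensation into the opening of the prompt."""
    return PROMPT_OPENING.format(sensation=sensation.describe())


if __name__ == "__main__":
    knot = Sensation("stomach", "knotted", 6, ["cold", "heavy"])
    print(build_prompt_opening(knot))
```

The point of the structure is simply to nudge you to name location, texture and intensity every time, which is what the prompt asks for.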

Sample Output:

Coach: “What does this sensation feel like; warm, heavy, or constricted?”

Coach: “When did it first show up today?”

Coach: “What thoughts were you having just before it appeared?”

Movement: “Gentle shoulder rolls → heel drops → chest openers”

Grounding: “30 seconds of breath with eyes open”

“Avoided feelings do not vanish; they wait in the muscles, rehearsing their return.”

Here’s a visual of the Somatic Mirror Prompt:

Optional Weekly Pattern Add-On

Based on what I’ve shared recently, do you notice any repeating body sensations or emotional states?

What might that pattern be asking for more of right now: rest, expression, boundaries, or support?

Why This Prompt Works 🧠🫀

This prompt works because it follows your body’s order of operations.

It doesn’t ask you to explain yourself.

It asks you to sense first.

You start with raw sensation, not interpretation.

AI slows the moment by asking gentle, clarifying questions.

Movement replaces rumination, giving emotion somewhere to go.

That sequence matters.

Neuroscience shows that naming bodily states reduces threat responses, while small, contained movements help the nervous system discharge tension without tipping into overwhelm.

The prompt uses both, in the right order.

It also avoids the common trap of emotional optimisation.

Nothing is fixed.

Nothing is rushed.

Instead, the prompt creates a safe loop: notice → move → reflect.

At a time when many people try to think their way out of feeling stuck, this approach does something quietly effective: it lets the body finish the sentence the mind keeps interrupting.

You’re not chasing insight.

You’re letting it surface.

AI Tool Spotlight: Reflectly

Reflectly is an AI journaling app that listens, really listens, to your descriptions of physical sensation and emotional nuance. It uses sentiment analysis and pattern detection to highlight recurring themes, emotional triggers and bodily tension points you might be skirting around.

Imagine describing a sensation like “a cold, heavy knot in my stomach” and having the app not only notice the word choice but relate it back to your expressed mood and behaviour over time.

That’s the power here.

Reflectly doesn’t give generic pep talks. It surfaces patterns you don’t see, making it easier to spot what your body is signalling before it becomes a headline emotion.

It’s like a thoughtful mirror that watches for cycles and shifts, not just moments.

Use Reflectly alongside the Somatic Mirror Prompt to track your sensations, emotional language and movement results over days and weeks.

That way, you don’t just move once, you notice what shifts over time and build a body‑wise awareness that feels grounded, subtle and human.
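If you would rather track patterns by hand, the idea can be sketched in a few lines of Python. This is not how Reflectly works and it does not use any Reflectly API; it is a plain local log, with made-up sample entries and a hypothetical `repeating` helper, that counts which body areas and tentative emotion labels keep coming back.

```python
from collections import Counter
from datetime import date

# Made-up sample week: (day, body location, tentative emotion label).
log = [
    (date(2025, 1, 6), "jaw", "frustration"),
    (date(2025, 1, 7), "chest", "worry"),
    (date(2025, 1, 8), "jaw", "frustration"),
    (date(2025, 1, 9), "stomach", "worry"),
    (date(2025, 1, 10), "jaw", "tiredness"),
]


def repeating(entries, min_count=2):
    """Return the locations and emotion labels seen at least min_count times."""
    locations = Counter(location for _, location, _ in entries)
    emotions = Counter(emotion for _, _, emotion in entries)
    return (
        {name: n for name, n in locations.items() if n >= min_count},
        {name: n for name, n in emotions.items() if n >= min_count},
    )


if __name__ == "__main__":
    repeated_locations, repeated_emotions = repeating(log)
    print("Repeating body areas:", repeated_locations)
    print("Repeating emotion labels:", repeated_emotions)
```

A short summary like this is something you could paste into the weekly pattern question so the AI has your actual week to reflect on.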

“AI doesn’t need to feel for you, only help you feel fully.”

What You Learned Today

✅ Your body is constantly signalling emotions your mind avoids.

✅ Describing physical sensations clearly unlocks emotional insight.

✅ Pairing AI with somatic awareness helps externalise what’s internal.

✅ Movement, even 5 minutes, changes your nervous system tone.

✅ A reflective loop amplifies awareness without overwhelming it.

Emotions aren’t puzzles to be solved, they’re currents to be felt and processed. By using the Somatic Mirror Method, you give your nervous system a voice and your mind a map.

You’re not turning your body into data.

You’re simply learning its language.

What sensation has been whispering at you today and what might it want you to do about it? 🧠 💬

“The first movement is not of the body, but of permission.”

⚠️ Medical Disclaimer

The information provided in this content is for educational and informational purposes only and is not intended as a substitute for professional medical, psychological, or mental health advice, diagnosis, or treatment. Always seek the guidance of a qualified healthcare provider or licensed mental health professional with any questions you may have regarding a medical or psychological condition.

Never disregard professional advice or delay seeking it because of information provided by an AI or through this content. If you are experiencing a medical emergency or mental health crisis, please contact emergency services or a local crisis line immediately. AI tools are not a replacement for clinical judgement. Use them mindfully, and always prioritise human care when in doubt.

Final Thoughts 🌿✨

Most self-growth strategies start in the mind, but emotions begin in the body. This approach flips the sequence: feel first, then understand.

When you bring AI into that loop, not as a therapist, but as a mirror, you’re not outsourcing wisdom, you’re amplifying awareness.

Start small.

Start honest.

Let your nervous system speak in its native tongue: sensation.

Because sometimes the smartest thing you can do is move. 🌀

“When sensation is your teacher, every ache is a doorway.”

📸 AI IMAGE GALLERY 📸

AI Art: Fantastical Fruitlings

In velvet orchards where silence coos, the baby fruits sleep in honeyed dews,

tiny lives wrapped in chlorophyll cribs, suckling sap that drips on tiny bibs, like small delicate rainbow lambs, they dream of food, of laughter, of jam.

Want to create these images yourself?

Go to Midjourney and plug this prompt into the editor. Once the image is generated you can use the new video feature to animate it.

Medium close-up, ultra-realistic front-facing dragon fruit with a fully visible human baby face seamlessly merged into the fruit and all the same colour palette. The eyes are wide, expressive, slightly watery, looking forward as if asking people for help. The mouth is clearly visible and slightly open, human lips detailed and natural, conveying a pleading expression. Small human-like hands can be visible near the face in a gentle "help me" gesture. Perfect face symmetry, mouth and eyes fully in frame. Clean background, professional studio lighting, ultra-sharp focus, 8K macro realism, no blur, no text, no watermark. --ar 16:9

Original digital prompt by @shanawar3632

Artwork + prompt modified by WellWired. Poem created by Cedric The AI Monk.

Bianca the Banana | David the Dragonfruit | Kerry the Kiwi | Oscar the Orange

👊🏽 STAY WELL 👊🏽

Cedric The AI Monk

That’s a wrap on today’s Well Wired edition, where your racing thoughts stopped shouting and your body finally got a word in. Today you didn’t analyse your feelings; you let them move through you. One sensation noticed, one small movement made, one quiet release through your nervous system. This was somatic intelligence meeting AI awareness 🧠🫀

Want more practices that blend body wisdom with thoughtful tech, AI‑guided prompts that respect your nervous system, or tools that help you grow without pushing harder?

Find me on X @cedricchenefront or @wellwireddaily, where movement, meaning and modern intelligence learn to work together.

Cedric the AI Monk: helping bodies speak, minds soften and technology stay in its place, one conscious pause at a time.

P.S. Well Wired is Created by Humans, Constructed With AI 👱🤖

🤣 AI MEME OF THE DAY 🤣

Did we do WELL? Do you feel WIRED?

I need a small favour because your opinion helps me craft a newsletter you love...

Disclaimer: None of the content in this newsletter is medical or mental health advice. The content of this newsletter is strictly for information purposes only. The information and eLearning courses provided by Well Wired are not designed as a treatment for individuals experiencing a medical or mental health condition. Nothing in this newsletter should be viewed as a substitute for professional advice (including, without limitation, medical or mental health advice). Well Wired has to the best of its knowledge and belief provided information that it considers accurate, but makes no representation and takes no responsibility as to the accuracy or completeness of any information in this newsletter. Well Wired disclaims to the maximum extent permissible by law any liability for any loss or damage however caused, arising as a result of any user relying on the information in this newsletter.